Aspects to consider when introducing artificial intelligence and machine learning applications in healthcare.

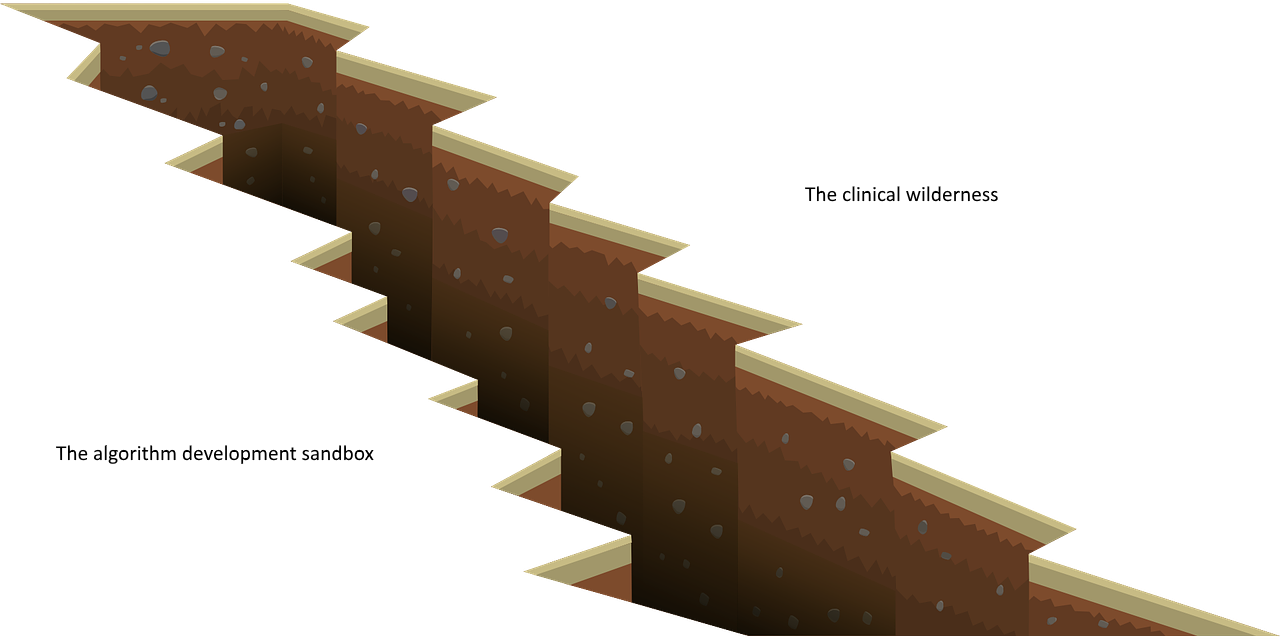

Today, far too many articles and blog posts on the web suggest that artificial intelligence (AI) and machine learning (ML) is some sort of magic pill that can easily be taken to ensure that all and any problems within healthcare will disappear overnight. As anyone who has been involved in introducing change can attest, change is difficult and often a slow process. Medical practices and healthcare IT is not an exception. Hence, it is not surprising to see today’s AI and ML hype with great hopes and expectations surrounding it but where actual implementations and deployments in clinical practice is more of a dream than a reality. One of the reasons to this is because of the divisive chasm between the controlled sandbox where algorithm development happens and the clinical wilderness where healthcare happens.

In this context, the algorithm development sandbox denotes an environment with typically a very limited number of data samples. Data samples that have been cleaned to fit a task independently chosen by a group of algorithm developers. Furthermore, the environment is static and void of actual end users. Note that the algorithm developers in this setting are not limited to academic researchers but rather to any group of people involved in algorithm development in an environment separated from clinical healthcare personnel. The clinical wilderness, on the contrary, contains an abundance of data (entire populations) and where the data comes in a variety of formats. The data itself is often dirty, as claimed adhered to standards are at best followed loosely. In addition, this environment is constantly changing. For example, a modality sending images of a certain format cannot be guaranteed to send in the same format a week later when its software has been upgraded.

Hence, moving from the algorithm development sandbox to the clinical wilderness is associated with several challenges:

- Providing and demonstrating clinical value

- Accessing relevant training data

- Building user-friendly AI/ML applications

- Deployment and integration with clinical workflows

Providing and demonstrating clinical value

This is probably the most important aspect to consider and something that many algorithm developers realize as soon as they leave their sandbox and meet actual intended end users. New technology and cool algorithms are not enough. To develop algorithms that will be used in clinical care, the developers/researchers need to focus on problems that are of importance to the end users (the healthcare personnel), the management of the end users or the customers of the end users (the patients). For example, will the algorithm make the physicians more efficient or even more effective, or will it allow the physicians to provide care that was not earlier possible to provide? Once a real clinical problem has been identified, it is also important to determine the volume. Solving a frequently occurring problem can provide a high clinical value even if the solution itself is not remarkable, whereas a very innovative solution to an almost never occurring problem is of very low clinical value.

Accessing relevant training data

“Data is the new oil!” We have all heard this and it is especially true for ML where access to data is key when training new algorithms. Over the past decade a lot has happened in terms of open access and making even medical image data available. For instance, The Cancer Imaging Archive or Grand Challenges in Biomedical Image Analysis are great sources for anyone looking for medical image data to train their algorithms. However, these sources can only get you so far as the data is often limited in number of samples and sources. Hence, to ensure robustness of any trained algorithm, it becomes important to establish access to additional data sources. For academic researchers, this can often be achieved by establishing a collaboration with a local hospital (often easier if it is an academic medical center), although this does not really solve the problem with a limited number of data sources. For a commercial group, it is also possible to form collaborations with hospitals, but here it is important to ensure that a win-win situation is established. It cannot be expected that hospitals will readily hand over their data with nothing in return.

Building user-friendly applications

Algorithm developers have a tendency to think that all that matters is their algorithm, that is as long as an algorithm provides sufficient accuracy (however “sufficient” and “accuracy”, respectively, are defined) the user will be content. This is, however, not the case. An ill-designed user interface can render an excellent algorithm useless, whereas a well-designed user interface can turn a mediocre algorithm into a highly useful tool.

Another aspect of this is that algorithms are not perfect. Hence, user friendly AI applications that ensures that failed predictions are easily spotted and handled are essential, especially in healthcare.

Deployment and integration with clinical workflows

The final step, once an algorithm has been trained with relevant and sufficient data, and a user-friendly interface has been developed, is to deploy the application in a clinical setting. Something that often turn out to be rather challenging.

Healthcare IT is not what it used to be decades ago. Ten to fifteen years ago it might have been possible to place a workstation/server with random hardware (HW) and software (SW) at a hospital, hook it up to the local network and have the end users test the application. Today’s healthcare IT is a lot more standardized and with significantly more security routines in place, which is good, but which makes it difficult, especially for non-established entities, to deploy their new AI applications. What kind of HW and SW will the application run on? HW and SW that is a part of the standardized healthcare IT environment or something else? Will the application run on premise or in the cloud? How is access to protected health information handled? Who will have access to this information? What patient safety and security measures are in place? These are just some of all the questions that will asked by the healthcare IT team.

Once all these questions relating to the deployment have been handled and answered satisfactorily, there is still the challenge of integrating your AI application within an existing workflow. Similar as discussed regarding building user-friendly AI applications, it is not enough to make the application available to the end users, it must tie into their existing workflows, as your application is not the only application the end users have at their disposal. For example, switching workstations to access another application is most of the time out of the question, and even switching applications on the same workstation is frowned upon. A developer might think that changing workstations or applications are not that cumbersome, but if you do it multiple times per day and each time you lose a few seconds or even minutes of productivity, then your willingness to work with a new application will decrease drastically.

Both of these aspects, deployment and integration, will be a lot easier to handle for the developers of AI applications if the local hospital has an IT system in place capable of integrating 3rd party applications through standardized protocols and interfaces.

Summary

Training and deploying AI/ML algorithms for healthcare is not just about the algorithms. On the contrary, the algorithms are just one of many aspects to consider. In this article, we have briefly looked at some of these, (1) providing and demonstrating clinical value, (2) accessing relevant training data, (3) building user-friendly applications and (4) deployment and integration with clinical workflows.

If interested to find out more about how Sectra and our work to integrate external ML applications within our systems can help you to establish a connection between new ML applications and end users, such as radiologists and pathologists, please reach out directly to me or join us at an upcoming event.

This article was originally published on LinkedIn: From the algorithm development sandbox to the clinical wilderness